The architecture of computing has remained fundamentally unchanged for nearly a century, relying on the classical bit, which exists in a state of either 0 or 1.

This foundational structure, while responsible for the digital revolution, is now approaching its physical limits, as the well-known slowdown of Moore’s Law shows.

The next great technological frontier—and the source of the most profound disruption—is Quantum Computing.

This revolutionary paradigm harnesses the bizarre and powerful laws of quantum mechanics to process information in ways that are fundamentally different from anything possible with classical machines and, for certain problems, exponentially faster.

The transition from classical bits to quantum bits, or qubits, is not merely an upgrade; it is a “quantum leap” that promises to redefine industries, crack modern cryptography, and unlock solutions to humanity’s most complex scientific and engineering problems.

This extensive analysis delves into the core principles that grant quantum computers their immense power, dissects the competing physical technologies striving for dominance, explores the transformative real-world applications across various sectors, examines the existential threat to cybersecurity, and charts the necessary strategic roadmap for businesses and governments navigating this imminent computational revolution.

The Physics of Power: Understanding Qubits and Quantum Mechanics

The exponential power of quantum computing is derived from three core quantum phenomena that allow a quantum computer to explore billions of possibilities simultaneously.

1. Qubits: The Quantum Bit

Unlike a classical bit, which must be either 0 or 1, the quantum bit, or qubit, can exist in a superposition of both states simultaneously.

A. Superposition

This principle means a single qubit can represent a vast range of outcomes, not just two. If you have n qubits, the system can represent 2^n states simultaneously.

For example, a modest 300-qubit machine could represent more states than there are atoms in the observable universe.

This massive parallelism is the primary source of the quantum computer’s speed advantage for certain problems.
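The 300-qubit comparison can be checked with a few lines of arithmetic. The sketch below assumes the common order-of-magnitude estimate of 10^80 atoms in the observable universe; the function name and the constant are illustrative, not taken from any library.

```python
# Compare the size of an n-qubit state space with a rough atom count.
ATOMS_IN_OBSERVABLE_UNIVERSE = 10**80  # order-of-magnitude estimate (assumption)

def state_space_size(n_qubits: int) -> int:
    """Number of basis states needed to describe n_qubits qubits."""
    return 2**n_qubits

for n in (1, 2, 10, 50, 300):
    size = state_space_size(n)
    # len(str(size)) - 1 gives the power of ten just below the exact value.
    print(f"{n:>3} qubits -> about 10^{len(str(size)) - 1} basis states")

print(state_space_size(300) > ATOMS_IN_OBSERVABLE_UNIVERSE)  # True
```

Python integers have arbitrary precision, so 2^300 is computed exactly rather than approximated.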

B. Entanglement

This is arguably the most counter-intuitive quantum phenomenon. Two or more qubits become linked in such a way that they share the same fate, regardless of the physical distance separating them.

Measuring the state of one entangled qubit instantaneously determines the correlated state of the other, although this correlation cannot be used to send information faster than light.

Entanglement is crucial because it allows the information processing capacity of the qubits to scale exponentially, connecting them into a single, cohesive computational unit.
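A small classical simulation can make the "shared fate" idea concrete. The sketch below is a hand-rolled two-qubit state vector in plain Python, not a real quantum SDK: it applies a Hadamard gate and a CNOT gate to build the standard Bell state, then samples measurements. The function names are hypothetical.

```python
import math
import random

def bell_state():
    """Amplitudes for (|00> + |11>)/sqrt(2), indexed as 00, 01, 10, 11."""
    # Start in |00>.
    a00, a01, a10, a11 = 1.0, 0.0, 0.0, 0.0
    # Hadamard on the first qubit mixes the pairs (00, 10) and (01, 11).
    h = 1 / math.sqrt(2)
    state = [h * (a00 + a10), h * (a01 + a11), h * (a00 - a10), h * (a01 - a11)]
    # CNOT with the first qubit as control swaps the amplitudes of |10> and |11>.
    state[2], state[3] = state[3], state[2]
    return state

def measure(state, rng):
    """Sample one two-bit outcome with probability amplitude squared."""
    probs = [a * a for a in state]  # amplitudes are real in this example
    return rng.choices(["00", "01", "10", "11"], weights=probs)[0]

rng = random.Random(0)
outcomes = [measure(bell_state(), rng) for _ in range(1000)]
print({o: outcomes.count(o) for o in sorted(set(outcomes))})
```

Only the outcomes 00 and 11 ever appear: each qubit on its own looks like a fair coin flip, yet the two results always agree.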

2. Quantum Tunneling and Coherence

The maintenance of the quantum state requires extreme environmental control.

A. Coherence

Qubits are extremely fragile. They maintain their delicate quantum state (superposition and entanglement) only for fleeting moments.

Any interaction with the external environment—heat, vibration, stray electromagnetic fields—causes the qubit to lose its quantum nature and revert to a classical state.

This loss of quantum integrity is called decoherence, and managing it is the single greatest engineering challenge in quantum computing.
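To see why decoherence limits how much computation fits into one run, a rough model helps. The sketch below assumes coherence decays as a simple exponential with a characteristic time T2, which is a simplification of real device behavior; the numeric values are illustrative, not measurements from any hardware.

```python
import math

def remaining_coherence(t_us: float, t2_us: float) -> float:
    """Fraction of coherence left after t_us microseconds (simple exponential model)."""
    return math.exp(-t_us / t2_us)

def gates_within_budget(t2_us: float, gate_time_us: float, min_coherence: float = 0.9) -> int:
    """How many sequential gates fit before coherence drops below min_coherence."""
    max_time_us = -t2_us * math.log(min_coherence)
    return int(max_time_us / gate_time_us)

T2_US = 100.0        # illustrative coherence time, not a measured value
GATE_TIME_US = 0.05  # illustrative gate duration, not a measured value

print(remaining_coherence(10.0, T2_US))
print(gates_within_budget(T2_US, GATE_TIME_US))
```

Under these assumed numbers only a few hundred sequential gates fit inside the coherence budget, which is why longer coherence times and faster gates both matter.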

B. Quantum Tunneling

This concept allows particles to pass through an energy barrier even if they do not classically possess enough energy to overcome it.

While not directly involved in qubit storage, understanding this non-classical behavior is fundamental to developing the quantum algorithms that exploit non-classical probabilities to find solutions.

3. Quantum Algorithms (The Software)

The exponential speedup is achieved only by running specific algorithms designed to harness quantum properties.

A. Shor’s Algorithm

Shor’s algorithm is the most famous and disruptive quantum algorithm. It can find the prime factors of large numbers exponentially faster than the best known classical algorithms.

This capability is the existential threat to modern public-key cryptography: RSA relies on the classical difficulty of factoring large numbers, and ECC relies on the closely related discrete logarithm problem, which Shor’s algorithm also solves efficiently.
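Shor’s algorithm reduces factoring to finding the order (period) of a number modulo N. The sketch below runs that reduction classically on a toy number: the brute-force loop in `find_order` stands in for the quantum period-finding step, which is the only part a quantum computer accelerates. The function names are hypothetical.

```python
from math import gcd

def find_order(a: int, n: int) -> int:
    """Smallest r > 0 with a**r % n == 1, found by brute force.

    This loop is the step a quantum computer would replace with period finding.
    Requires gcd(a, n) == 1, otherwise no such r exists.
    """
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r

def factor_from_order(a: int, n: int):
    """Try to split n using the order of a modulo n; return None if this a fails."""
    shared = gcd(a, n)
    if shared != 1:
        return shared, n // shared   # lucky guess: a already shares a factor with n
    r = find_order(a, n)
    if r % 2 == 1:
        return None                  # odd order: pick a different a
    half = pow(a, r // 2, n)
    if half == n - 1:
        return None                  # trivial square root: pick a different a
    p = gcd(half - 1, n)
    return p, n // p

print(factor_from_order(7, 15))  # (3, 5)
```

For real key sizes the brute-force loop would never finish, which is exactly the gap the quantum subroutine is meant to close.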

B. Grover’s Algorithm

This algorithm provides a quadratic speedup for searching unsorted databases compared to classical search methods.

While not an exponential speedup, it significantly enhances the efficiency of optimization problems, machine learning searches, and code-breaking attempts on symmetric encryption (like AES).
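The quadratic speedup can be seen in a small classical simulation of the amplitudes. The sketch below tracks one real amplitude per database entry, so it is only feasible for tiny inputs and is not how a quantum device would run; the function name is hypothetical.

```python
import math

def grover_search(n_items: int, marked: int):
    """Simulate Grover's algorithm on a list of real amplitudes.

    Returns (number_of_iterations, probability_of_measuring_marked).
    """
    amp = [1 / math.sqrt(n_items)] * n_items          # uniform superposition
    iterations = math.floor(math.pi / 4 * math.sqrt(n_items))
    for _ in range(iterations):
        amp[marked] = -amp[marked]                    # oracle: flip sign of the marked item
        mean = sum(amp) / n_items
        amp = [2 * mean - a for a in amp]             # diffusion: inversion about the mean
    return iterations, amp[marked] ** 2

iterations, p_success = grover_search(n_items=1024, marked=123)
print(iterations, round(p_success, 3))
```

With 1,024 entries the marked item is found with high probability after 25 iterations, where a classical scan needs about 512 lookups on average. Applied to key search, the same square-root scaling is why Grover’s algorithm is usually described as halving the effective key length of a symmetric cipher.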

C. Quantum Phase Estimation (QPE)

QPE is a critical subroutine used in many complex applications, including calculating the energy levels of molecules for chemistry and materials science, where exact classical simulation becomes intractable as the molecules grow.

The Hardware Race: Competing Qubit Technologies

Building a stable, scalable qubit is an enormous engineering feat. Several distinct physical implementations are vying to become the industry standard.

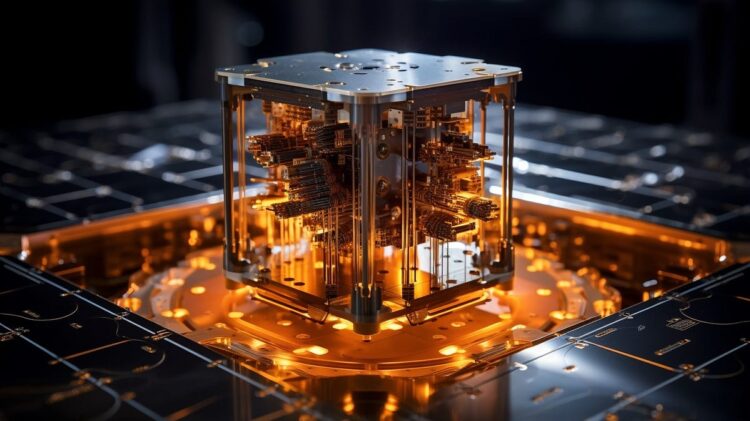

1. Superconducting Qubits (The Current Leader)

This technology is the backbone of systems developed by Google and IBM, currently leading the charge in raw qubit count.

A. Physical Mechanism

Qubits are created using tiny electrical circuits made of superconducting materials (e.g., aluminum) cooled to near absolute zero. At this temperature, the circuits exhibit quantum behavior.

B. Advantages

They are highly scalable using established semiconductor manufacturing techniques and can be controlled using standard microwave pulses.

C. Disadvantages

The necessity of massive, complex, and expensive dilution refrigerators to maintain the ultra-low temperatures creates significant operational overhead and limits portability.

2. Trapped Ion Qubits (The Precision Alternative)

Companies like IonQ utilize trapped ions, which offer exceptionally high quality and stability.

A. Physical Mechanism

Qubits are individual charged atoms (ions) that are suspended and held in place by electromagnetic fields (ion traps) in a vacuum chamber. Lasers are used to manipulate and read the quantum states.

B. Advantages

Trapped ions boast the highest coherence times (how long the qubit remains stable) and the highest gate fidelity (accuracy of operations) of any current technology, resulting in lower error rates.
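Small differences in gate fidelity matter because errors compound over a circuit. The sketch below makes the simplifying assumption that every gate fails independently with the same probability. The fidelity values are illustrative, not figures from any vendor.

```python
def circuit_success_estimate(gate_fidelity: float, n_gates: int) -> float:
    """Rough chance that all n_gates succeed, assuming independent errors."""
    return gate_fidelity ** n_gates

for fidelity in (0.99, 0.999, 0.9999):       # illustrative values only
    for n_gates in (100, 1_000, 10_000):
        estimate = circuit_success_estimate(fidelity, n_gates)
        print(f"fidelity={fidelity} gates={n_gates} success~{estimate:.4f}")
```

Under this model a 1,000-gate circuit at 99.9% fidelity succeeds only about a third of the time, which is why each additional "nine" of fidelity is significant.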

C. Disadvantages

Scaling is challenging. As the number of ions increases, manipulating and isolating individual ions becomes significantly more complex.

3. Photonic Qubits (The Optical Approach)

This method uses photons (particles of light) as the carriers of quantum information.

A. Physical Mechanism

Qubits are encoded in the polarization or timing of individual photons, manipulated using optical components like beam splitters and mirrors.

B. Advantages

Photons operate at room temperature, eliminating the need for complex refrigeration, and are ideal for transmitting quantum information over long distances (quantum networking).

C. Disadvantages

Control and entanglement are difficult. It is hard to make photons interact reliably, and the technology often requires high photon throughput to overcome the inherent randomness of light.

Transformative Applications: Beyond Classical Limits

Quantum computers are not replacements for classical PCs; they are accelerators designed to tackle problems that are fundamentally intractable for even the most powerful supercomputers.

1. Materials Science and Chemistry

This is often cited as the killer application for early quantum computers.

A. Molecular Simulation

Classical computers can only simulate the quantum behavior of very small molecules. Quantum computers can accurately model the complex interactions of electrons in large molecules, leading to the discovery of highly efficient catalysts, new stable battery materials, and superconductors.

B. Drug Discovery

Accurate simulation allows researchers to model how new drug compounds will interact with complex proteins, drastically accelerating the identification of viable drug candidates and potentially revolutionizing pharmaceutical research.

2. Finance and Optimization

The ability to process vast, high-dimensional datasets makes quantum computing highly attractive to the financial sector.

A. Portfolio Optimization

Quantum algorithms can analyze complex financial markets and optimize investment portfolios under various, evolving constraints (risk tolerance, liquidity, regulatory limits) far more effectively than classical models.

B. Fraud Detection

Enhanced machine learning capabilities allow for the rapid identification of subtle, complex patterns indicative of fraud in real-time transaction streams, improving the accuracy and speed of financial security.

C. Risk Analysis

Quantum techniques can accurately model complex financial derivatives and catastrophic tail risks, providing more robust methods for bank solvency and systemic risk assessment.

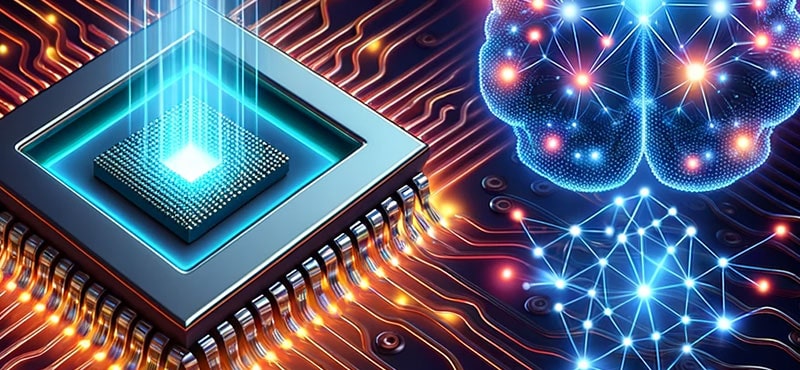

3. Machine Learning and Artificial Intelligence (Quantum AI)

Quantum computation promises to enhance the speed and capability of machine learning models.

A. Quantum Machine Learning (QML)

Applying quantum principles to accelerate computationally intensive steps in AI, such as training neural networks or reducing the dimensionality of complex data sets, potentially leading to faster and more efficient AI.

B. Big Data Processing

Quantum search and optimization algorithms can accelerate the processing of massive datasets, improving the speed of predictive modeling in everything from climate science to logistics.

The Existential Threat: Quantum Cryptography and Security

The most immediate and disruptive implication of quantum computing is the ability to break the security protocols that currently protect the internet, financial transactions, and military secrets.

1. The Post-Quantum Cryptography (PQC) Transition

The threat posed by Shor’s Algorithm has spurred a massive, globally coordinated effort to develop and standardize new, quantum-resistant encryption.

A. NIST Standardization

The U.S. National Institute of Standards and Technology (NIST) has led a global competition to select the best PQC algorithms that can run on classical hardware but are mathematically resistant to quantum attacks.

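NIST published its first finalized PQC standards in 2024, including the lattice-based ML-KEM (derived from CRYSTALS-Kyber) for key establishment and the hash-based SLH-DSA (derived from SPHINCS+) for digital signatures.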

B. Cryptographic Agility

Organizations must develop “crypto-agility”—the ability to quickly switch between different cryptographic algorithms—to prepare for the PQC transition and to manage the risk of flaws being found in the new quantum-safe standards.
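In code, crypto-agility usually means never hard-wiring an algorithm at the call site. The sketch below shows one possible shape: a registry keyed by algorithm identifier, with the identifier stored alongside the protected data. The HMAC variants from the Python standard library are stand-ins only, and the registry, function names, and envelope layout are all hypothetical. A real migration would register vetted classical and post-quantum schemes instead.

```python
import hashlib
import hmac

# Hypothetical registry: algorithm identifier -> tagging function.
# The HMAC variants are stand-ins for whatever schemes an organization approves.
ALGORITHMS = {
    "hmac-sha256": lambda key, msg: hmac.new(key, msg, hashlib.sha256).digest(),
    "hmac-sha3-256": lambda key, msg: hmac.new(key, msg, hashlib.sha3_256).digest(),
}

ACTIVE_ALGORITHM = "hmac-sha256"  # would come from configuration, not code edits

def protect(key: bytes, msg: bytes, algorithm=None) -> dict:
    """Tag a message and record which algorithm produced the tag."""
    name = algorithm or ACTIVE_ALGORITHM
    return {"alg": name, "msg": msg, "tag": ALGORITHMS[name](key, msg)}

def verify(key: bytes, envelope: dict) -> bool:
    """Verify using whichever algorithm the envelope names."""
    expected = ALGORITHMS[envelope["alg"]](key, envelope["msg"])
    return hmac.compare_digest(expected, envelope["tag"])

key = b"k" * 32
old = protect(key, b"payload")
new = protect(key, b"payload", algorithm="hmac-sha3-256")
print(verify(key, old), verify(key, new))  # True True
```

Because each envelope names its algorithm, data protected under the old scheme stays verifiable while new data moves to the replacement, and swapping the default is a one-line configuration change.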

2. Quantum Key Distribution (QKD)

A completely different approach to security, QKD uses quantum physics itself to guarantee secure communication.

A. Unbreakable Security

QKD relies on the principle that observing a quantum state (like a qubit used to transmit an encryption key) inevitably destroys or alters that state. This means any eavesdropper attempting to intercept the key introduces detectable errors, making the key exchange process unconditionally secure in principle.
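The detection principle can be illustrated with a toy simulation of BB84, the best-known QKD protocol. The sketch below models photons as a bit plus a basis label and assumes an ideal channel with a simple intercept-and-resend eavesdropper. It ignores noise, photon loss, and the later error-correction and privacy-amplification steps, and the function name is hypothetical.

```python
import random

def bb84_error_rate(n_photons: int, eavesdrop: bool, seed: int = 0) -> float:
    """Simulate BB84 sifting and return the error rate on the sifted key."""
    rng = random.Random(seed)
    errors = kept = 0
    for _ in range(n_photons):
        bit = rng.randint(0, 1)
        alice_basis = rng.choice("+x")
        photon_bit, photon_basis = bit, alice_basis

        if eavesdrop:
            eve_basis = rng.choice("+x")
            if eve_basis != photon_basis:
                photon_bit = rng.randint(0, 1)   # wrong basis: Eve gets a random result
            photon_basis = eve_basis             # Eve resends in her own basis

        bob_basis = rng.choice("+x")
        if bob_basis != photon_basis:
            bob_bit = rng.randint(0, 1)          # wrong basis: Bob gets a random result
        else:
            bob_bit = photon_bit

        if bob_basis == alice_basis:             # sifting: keep matching bases only
            kept += 1
            errors += bob_bit != bit
    return errors / kept

print(bb84_error_rate(20_000, eavesdrop=False))
print(bb84_error_rate(20_000, eavesdrop=True))
```

Without an eavesdropper the sifted keys agree perfectly in this idealized model. With one, roughly a quarter of the sifted bits disagree, which the two parties can detect by comparing a random sample of their key.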

B. Limitations

QKD relies on transmitting photons and is currently limited by distance and the need for dedicated fiber optic lines (or line-of-sight in space), making it practical for specific high-security links rather than the general internet.

Strategic Imperatives: Navigating the Quantum Future

The quantum transition is a long-term strategic challenge requiring immediate preparatory steps, particularly concerning data security.

1. Data Security and the HNDL Threat

The “Harvest Now, Decrypt Later” (HNDL) threat is the most urgent security concern.

A. Inventory High-Value Data

Organizations must immediately identify and inventory all data that requires confidentiality for more than 5 to 10 years (e.g., trade secrets, IP, sensitive personal records). Adversaries, including state actors, may already be harvesting such data in encrypted form while they await a quantum computer capable of decrypting it.

B. Immediate PQC Retrofitting

High-value, long-term data must be secured now using PQC algorithms or a hybrid approach, even if the quantum computer is years away, to neutralize the threat posed by current data harvesting.

C. Quantum Risk Assessment

Perform a comprehensive audit to understand which assets, services, and communications rely on vulnerable classical cryptography and establish a timeline for PQC migration.
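One widely used rule of thumb for this kind of audit, often attributed to Michele Mosca, compares three durations: how long the data must stay secret, how long migration will take, and how long until a cryptographically relevant quantum computer exists. The sketch below applies that inequality to a made-up inventory. Every asset, duration, and the threat horizon is a hypothetical planning input, not a prediction.

```python
def at_risk_from_hndl(shelf_life_years: float, migration_years: float,
                      years_until_quantum_threat: float) -> bool:
    """Mosca-style check: data is exposed if secrecy lifetime plus migration
    time exceeds the time remaining before a quantum attack is feasible."""
    return shelf_life_years + migration_years > years_until_quantum_threat

# Hypothetical inventory: (asset, required secrecy in years, migration effort in years)
inventory = [
    ("session tokens", 0.1, 1),
    ("customer records", 10, 3),
    ("trade secrets", 25, 3),
]
THREAT_HORIZON_YEARS = 12  # planning assumption only; the real date is unknown

for asset, shelf_life, migration in inventory:
    flag = at_risk_from_hndl(shelf_life, migration, THREAT_HORIZON_YEARS)
    print(f"{asset}: {'prioritize' if flag else 'monitor'}")
```

Running the check across a range of threat horizons, rather than a single guess, shows which assets are exposed under every plausible timeline and should move first.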

2. Talent Development and Investment

The quantum field is severely limited by a shortage of specialized human capital.

A. Upskilling and Education

Invest in training current software engineers, physicists, and mathematicians in the principles of quantum information science and in quantum programming frameworks (e.g., Qiskit, Cirq).

B. Strategic Partnerships

Form collaborations with universities, government labs, and leading quantum computing companies to gain early access to hardware and talent, ensuring the organization is prepared to integrate quantum capabilities when they become mature.

C. Develop Quantum Use Cases

Begin small pilot projects utilizing quantum simulators (classical software that mimics quantum behavior) to identify specific optimization, simulation, or AI problems within the organization that could yield a competitive advantage with quantum speedup.

Conclusion

Quantum computing represents not an evolution, but a true revolution in information processing. By harnessing superposition and entanglement, the qubit unlocks a computational power that defies classical intuition and promises exponential speedups for a specific class of complex, high-impact problems.

While challenges remain formidable—particularly managing decoherence and scaling the qubit count—the strategic imperative is clear: the quantum era is imminent.

The race to develop stable quantum hardware and the parallel effort to implement Post-Quantum Cryptography are two sides of the same technological coin.

For businesses and governments, the next decade is the critical window to transition security protocols and build the foundational knowledge necessary to survive the cryptographic threat and thrive in the new dawn of quantum-accelerated innovation.

The future of computation is quantum, and it is arriving much sooner than previously anticipated.