In the rapidly evolving digital landscape, Artificial Intelligence (AI) has emerged as a double-edged sword: a powerful tool for innovation and a formidable weapon in the hands of malicious actors. As organizations increasingly rely on vast, interconnected data ecosystems, they face sophisticated, automated threats driven by AI and Machine Learning (ML). These AI threats differ sharply from traditional, human-operated attacks: they are faster, more evasive, and able to operate at a scale that overwhelms conventional defenses. Protecting sensitive data, the lifeblood of any modern enterprise, against these intelligent adversaries is no longer a matter of simply building higher walls but of designing an adaptive, AI-informed defense strategy.

This in-depth guide analyzes emerging AI threats, details the new vulnerabilities they exploit, and outlines a robust, multi-layered security framework for fortifying your data in the age of intelligent cyber warfare.

The New Threat Landscape: AI-Powered Attacks

AI-driven cyberattacks leverage machine learning to automate the most time-consuming and complex stages of a breach, moving beyond simple brute-force techniques to highly targeted, personalized, and efficient campaigns.

A. Automated Reconnaissance and Phishing

AI excels at gathering and analyzing massive amounts of public and leaked data to create hyper-realistic, personalized attacks.

- Spear-Phishing at Scale: Generative AI models (such as advanced LLMs) can craft flawless, context-aware phishing emails that mimic specific internal communications, complete with correct jargon, company branding and signatures, and knowledge of internal projects. Such messages can slip past traditional spam filters and human suspicion alike.

- Vulnerability Mapping: ML algorithms continuously scan public repositories, corporate websites, and network footprints to automatically identify security weaknesses, prioritize the most exploitable flaws, and map ideal infiltration routes before a human attacker ever intervenes.

- Deepfake Social Engineering: AI-generated voice or video (deepfakes) can impersonate executives or trusted colleagues during live video or voice calls, coercing employees into revealing credentials or transferring funds (an escalation of vishing, or voice phishing).

B. Polymorphic Malware and Evasion

Traditional security systems rely on signature-based detection (matching files against known malware code). AI-assisted malware erodes this defense by producing variants that no existing signature matches.

- Self-Modifying Code: AI can automate the generation of polymorphic malware, which rewrites its code, and therefore its signature, with every infection, so each copy appears to conventional antivirus systems as a new, unknown threat.

- Adaptive Sandbox Evasion: ML-powered malware can detect when it is being run inside a security “sandbox” (a controlled testing environment). It can then modify its behavior, remaining dormant or performing benign actions until it confirms it has reached a real, live target system.

- Zero-Day Exploitation Prediction: Advanced AI is being developed to analyze code patterns and rapidly identify logic flaws or weaknesses in new software releases before the vendor is even aware of them, a sophisticated route to zero-day exploitation.
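To see why exact-match signatures struggle against polymorphic code, here is a minimal sketch that models a “signature” as the SHA-256 hash of a payload. The payload is a harmless placeholder byte string, and the mutation (appending random padding) is a deliberately simplistic stand-in for a real polymorphic engine.

```python
import hashlib
import os


def signature(payload: bytes) -> str:
    """Model a classic antivirus signature as the SHA-256 digest of the exact bytes."""
    return hashlib.sha256(payload).hexdigest()


def mutate(payload: bytes) -> bytes:
    """Toy 'polymorphic' step: append random padding that does not change behavior."""
    return payload + os.urandom(8)


# Harmless placeholder standing in for a known-bad sample.
known_sample = b"PLACEHOLDER-PAYLOAD"
signature_db = {signature(known_sample)}

variant = mutate(known_sample)

print(signature(known_sample) in signature_db)  # True: the exact sample is caught
print(signature(variant) in signature_db)       # False: same behavior, new hash
```

Real engines also reorder instructions, encrypt their bodies, and insert dead code, but the effect on hash-based signatures is the same: every copy looks new.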

C. Adversarial Machine Learning Attacks (AML)

A unique and potent class of AI threat targets the very ML models that organizations use for everything from fraud detection to facial recognition.

- Data Poisoning: Attackers intentionally feed corrupted or misleading data into an organization’s training dataset, causing the target ML model to learn incorrect patterns, leading to biased or inaccurate outcomes (e.g., teaching an intrusion detection system to ignore malicious activity).

- Model Evasion: Crafting subtle, often imperceptible alterations to input data (called adversarial examples), such as tiny changes to pixel values in an image or a slight rewording of a sentence, that cause a security model to misclassify a dangerous input as harmless.

- Model Extraction: Stealing a proprietary ML model by querying it repeatedly and using the responses to reconstruct its behavior (for example, by training a copy on the query results). The copy reveals the model’s blind spots and lets the attacker design targeted evasion techniques offline.
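Model evasion is easiest to see on a linear classifier. The sketch below uses a hypothetical, hand-set logistic “malware score” model (the weights, bias, features, and budget are invented for illustration) and applies a fast-gradient-sign-style perturbation. For a score of sigmoid(w·x + b), the sign of the gradient with respect to x is simply sign(w), so shifting each feature by -ε·sign(w_i) lowers the score as quickly as possible while changing no feature by more than ε. In image models the same idea spreads tiny changes across many pixel values, which is why the alteration can be imperceptible.

```python
import math

# Hypothetical linear detector: weights and bias are illustrative, not a real model.
WEIGHTS = [2.0, -1.5, 3.0, 0.5]
BIAS = -1.0


def malicious_probability(x):
    """Logistic score: sigmoid(w . x + b); above 0.5 means 'malicious'."""
    z = sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS
    return 1.0 / (1.0 + math.exp(-z))


def sign(v):
    return (v > 0) - (v < 0)


def fgsm_evade(x, epsilon):
    """Move each feature by epsilon against the gradient of the score.

    For a linear model the gradient direction of z with respect to x is WEIGHTS,
    so the attacker subtracts epsilon * sign(w_i) from each feature.
    """
    return [xi - epsilon * sign(w) for w, xi in zip(WEIGHTS, x)]


sample = [0.6, 0.2, 0.5, 0.4]
adversarial = fgsm_evade(sample, epsilon=0.3)

print(round(malicious_probability(sample), 3))       # flagged as malicious
print(round(malicious_probability(adversarial), 3))  # now scored as benign
```

Each feature moves by at most 0.3, yet the logit drops by 0.3 × (2.0 + 1.5 + 3.0 + 0.5) = 2.1, which is enough to push the score from above 0.5 to below it.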

The Adaptive Defense Framework: AI for Protection

To counter intelligent threats, defenses must be equally intelligent. Cybersecurity must transition from reactive, perimeter-focused protection to proactive, AI-driven defense-in-depth.

A. Leveraging AI for Anomaly Detection

The first line of AI defense is using ML to spot activity that deviates from the established norm, often the first visible sign of a sophisticated intrusion.

- Behavioral Analytics: AI learns the “normal” behavior of every user, device, and application on the network (e.g., typical login times, file access patterns, and data transfer volumes). Any significant deviation, such as a user suddenly accessing an unusual database or transferring massive files late at night, is flagged immediately, often before the breach can escalate.

- Zero-Trust Contextualization: Instead of trusting users inside the perimeter, AI continuously assesses the risk of every access request based on context (device health, geolocation, time of day). If the risk score exceeds a threshold, access is automatically revoked or limited.

- Network Traffic Analysis (NTA): ML models analyze metadata and flow records in real time, identifying unusual network tunneling or encrypted communication patterns characteristic of malware command-and-control (C2) channels.
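A minimal sketch of the baselining idea behind behavioral analytics, assuming a single feature (one user’s daily outbound transfer volume) and a simple z-score rule; production systems model many features jointly and usually with more robust statistics. The history values and the threshold of 3 standard deviations are hypothetical.

```python
import statistics


def is_anomalous(history_mb, today_mb, threshold=3.0):
    """Flag today's transfer volume if it sits more than `threshold`
    standard deviations above the user's own historical mean."""
    mean = statistics.mean(history_mb)
    stdev = statistics.stdev(history_mb)  # sample standard deviation
    if stdev == 0:
        # Perfectly flat history: any increase is a deviation.
        return today_mb > mean
    z = (today_mb - mean) / stdev
    return z > threshold


# Hypothetical 10-day baseline of outbound data for one user, in MB.
baseline = [120, 95, 130, 110, 105, 125, 118, 99, 112, 121]

print(is_anomalous(baseline, 140))   # False: within normal variation
print(is_anomalous(baseline, 4800))  # True: sudden bulk export
```

The baseline is per user, so 140 MB is unremarkable here but might be anomalous for someone whose history hovers around 5 MB.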

B. Automated Incident Response (SOAR)

The speed of AI attacks demands that the time between detection and response is measured in seconds, not hours.

- Security Orchestration, Automation, and Response (SOAR): AI-powered SOAR platforms automatically execute multi-step playbooks when a threat is detected. For instance, if malware is found: the platform can automatically isolate the infected endpoint, block the malicious IP at the firewall, force password resets for the compromised user, and notify the security team.

- Pre-Emptive Threat Hunting: AI actively hunts for subtle indicators of compromise (IOCs) within the network that humans might miss, such as minor changes to system registry settings or dormant communication scripts.

- Deception Technology: Utilizing AI to create realistic, fake network decoys, honeypots, and deceptive data traps. When an attacker (or their automated AI tool) interacts with these decoys, the system gains crucial intelligence on the attacker’s tactics while containing the threat within a controlled environment.
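The malware-containment playbook described above can be sketched as an ordered list of steps that runs automatically on a detection event. Every action function here is a hypothetical placeholder that only records what a real integration (EDR, firewall, identity provider, ticketing system) would do; no vendor API is implied.

```python
from dataclasses import dataclass, field


@dataclass
class Alert:
    host: str
    source_ip: str
    user: str


@dataclass
class PlaybookRun:
    actions: list = field(default_factory=list)

    def record(self, action: str) -> None:
        self.actions.append(action)


# Hypothetical actions: each would call a real security tool in production.
def isolate_endpoint(alert, run):
    run.record(f"isolate {alert.host}")


def block_ip(alert, run):
    run.record(f"block {alert.source_ip}")


def force_password_reset(alert, run):
    run.record(f"reset {alert.user}")


def notify_team(alert, run):
    run.record(f"notify SOC about {alert.host}")


# Containment first, then credential hygiene, then humans in the loop.
MALWARE_PLAYBOOK = [isolate_endpoint, block_ip, force_password_reset, notify_team]


def run_playbook(playbook, alert):
    run = PlaybookRun()
    for step in playbook:
        step(alert, run)  # steps execute in the order listed
    return run


run = run_playbook(MALWARE_PLAYBOOK, Alert("laptop-042", "203.0.113.7", "jdoe"))
print(run.actions)
# ['isolate laptop-042', 'block 203.0.113.7', 'reset jdoe', 'notify SOC about laptop-042']
```

Keeping the playbook as data (a plain list of steps) makes it easy to review, reorder, or insert a human approval gate before disruptive actions.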

C. Strengthening the Data Foundation

The ultimate goal of most cyberattacks is the data itself. Protection must be applied at the data layer, regardless of location (cloud, on-premises, or endpoint).

- Data Classification and Labeling: Implementing AI tools to automatically classify data based on sensitivity (e.g., PII, confidential, public) and apply appropriate, granular access controls and encryption policies.

- Homomorphic Encryption (HE): A cryptographic method that allows AI or cloud services to perform computation and analysis on encrypted data without ever decrypting it. Because the processing environment never sees the plaintext, the data stays confidential even if that environment is compromised, though fully homomorphic schemes still carry a large performance overhead.

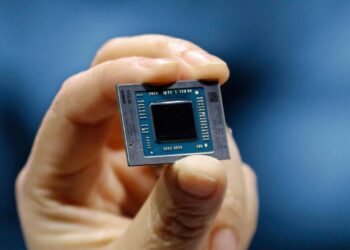

- Confidential Computing: Utilizing hardware-based security features (like Trusted Execution Environments, or TEEs) to isolate data while it is in use and keep it encrypted in memory, shielding it from the OS, hypervisor, and even cloud administrators.
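Fully homomorphic schemes are complex, but the core idea of computing on ciphertexts can be shown with the Paillier cryptosystem, swapped in here for illustration. Paillier is only additively homomorphic (a partially homomorphic scheme): multiplying two ciphertexts yields an encryption of the sum of their plaintexts. The tiny primes make this a toy that is trivially breakable; real deployments rely on vetted libraries and far larger keys.

```python
import math
import secrets


def keygen(p, q):
    """Toy Paillier key generation with generator g = n + 1 (p, q distinct primes)."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p-1, q-1)
    mu = pow(lam, -1, n)  # modular inverse; valid because g = n + 1
    return n, (lam, mu)


def encrypt(n, m):
    """c = (n+1)^m * r^n mod n^2, with random r coprime to n."""
    n2 = n * n
    while True:
        r = secrets.randbelow(n - 1) + 1
        if math.gcd(r, n) == 1:
            break
    return (pow(n + 1, m, n2) * pow(r, n, n2)) % n2


def decrypt(n, priv, c):
    """m = L(c^lam mod n^2) * mu mod n, where L(u) = (u - 1) // n."""
    lam, mu = priv
    u = pow(c, lam, n * n)
    return ((u - 1) // n * mu) % n


def add_encrypted(n, c1, c2):
    """Homomorphic addition: the product of ciphertexts decrypts to the sum."""
    return (c1 * c2) % (n * n)


n, priv = keygen(1009, 1013)  # toy primes: insecure, for illustration only
c_a = encrypt(n, 42)
c_b = encrypt(n, 58)
c_sum = add_encrypted(n, c_a, c_b)  # computed without decrypting c_a or c_b
print(decrypt(n, priv, c_sum))      # 100
```

In this setup the data owner keeps `priv`; the processing service only ever receives `n` and ciphertexts, yet it can still produce an encrypted total.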

The Human Factor and Policy Oversight


Technology alone cannot secure data. A robust defense requires educated personnel and clear, enforceable policy frameworks that address the ethical and legal ambiguities of AI warfare.

A. Training for AI-Awareness

The human employee remains the most vulnerable entry point. Training must be updated to address the sophistication of AI-generated attacks.

- Deepfake Recognition Drills: Conducting internal exercises that expose employees to highly realistic, but fake, internal communications (voice, video, and email) to train their critical judgment against synthetic media.

- Contextual Phishing Training: Moving past generic phishing simulations to highly personalized, AI-generated phishing drills that mirror the complexity of real-world threats.

- Code and Model Auditing: Training development teams on security best practices for AI/ML development, including rigorous auditing of training datasets for poisoned samples and validating the robustness of models against adversarial input.
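One simple heuristic a development team might use when auditing for label-flipping poisoning (chosen here for illustration; the text does not prescribe a method) is to flag training samples whose label disagrees with most of their nearest neighbors. The 2-D points, labels, and k = 3 below are hypothetical.

```python
import math
from collections import Counter


def suspicious_labels(samples, k=3):
    """Return indices of samples whose label disagrees with the majority
    label of their k nearest neighbors (Euclidean distance)."""
    flagged = []
    for i, (point, label) in enumerate(samples):
        neighbors = sorted(
            (math.dist(point, other), other_label)
            for j, (other, other_label) in enumerate(samples)
            if j != i
        )[:k]
        majority, _ = Counter(lbl for _, lbl in neighbors).most_common(1)[0]
        if majority != label:
            flagged.append(i)
    return flagged


# Hypothetical training set: two clusters, plus one malicious sample relabeled as benign.
training = [
    ((0.1, 0.2), "benign"), ((0.2, 0.1), "benign"), ((0.15, 0.25), "benign"),
    ((0.9, 0.8), "malicious"), ((0.85, 0.95), "malicious"), ((0.95, 0.9), "malicious"),
    ((0.88, 0.87), "benign"),  # poisoned: sits inside the malicious cluster
]
print(suspicious_labels(training))  # [6]
```

Flagged samples are best routed to human review rather than deleted automatically; a large, tightly clustered batch of poisoned points can outvote this check.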

B. Ethical AI and Bias Mitigation

The AI used for defense must be fair, transparent, and compliant with privacy regulations (like GDPR or CCPA).

- Bias Auditing: Continuously auditing the defensive AI models to ensure they do not exhibit bias (e.g., disproportionately flagging activity by certain demographic groups), which could lead to unfair access denials or compliance violations.

- Transparency and Explainability (XAI): Ensuring that the security AI can provide clear explanations for its decisions (e.g., “The system flagged this transaction because the user logged in from a new country and is attempting to access sensitive PII”), allowing human analysts to validate its judgment and build trust.
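An explanation like the one quoted above falls out naturally from an additive risk score, in which each contextual signal contributes a known number of points. The signal names, weights, and threshold below are hypothetical; the same structure could also drive the zero-trust access decisions described earlier, so that every decision carries its reasons.

```python
# Hypothetical risk weights; a real system would calibrate these from data.
RISK_WEIGHTS = {
    "new_country": 40,
    "sensitive_pii_access": 30,
    "unmanaged_device": 20,
    "off_hours": 10,
}
THRESHOLD = 60


def assess(signals):
    """Return (decision, score, reasons) for a dict of observed boolean signals."""
    reasons = [name for name in RISK_WEIGHTS if signals.get(name)]
    score = sum(RISK_WEIGHTS[name] for name in reasons)
    decision = "flag" if score >= THRESHOLD else "allow"
    return decision, score, reasons


decision, score, reasons = assess({"new_country": True, "sensitive_pii_access": True})
print(decision, score, "because:", ", ".join(reasons))
# flag 70 because: new_country, sensitive_pii_access
```

Additive scores trade some accuracy for transparency; for more complex models, post-hoc attribution methods such as SHAP serve the same explanatory role.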

C. Regulatory and Policy Frameworks

Governments and regulatory bodies must adapt to govern AI’s dual-use potential.

- AI Risk Assessment: Requiring organizations to conduct mandatory, periodic risk assessments specifically focused on their exposure to AI-generated threats and the robustness of their AI defense systems.

- International Cooperation: Establishing global standards for ethical AI development and data sharing agreements among nations to combat sophisticated, state-sponsored AI cyberattacks, which often originate across borders.

- Liability and Accountability: Defining clear legal lines of responsibility when an automated AI system makes a destructive security error, clarifying whether the fault lies with the data, the model’s training, or the human operator.

Strategic Long-Term Data Fortification


For long-term resilience, data security must be integrated into the core architecture of the organization, moving beyond the traditional security operations center (SOC).

1. Data Mesh and Decentralized Access

Moving away from centralized data lakes toward a data mesh architecture treats data as a product and decentralizes access control. Because each domain governs access to its own data, a breach in one area need not compromise the entire data ecosystem.

- Data Ownership: Empowering specific domain teams with ownership and direct responsibility for their data’s security and quality.

- Standardized Interoperability: Ensuring consistent, high-level security protocols and API standards govern how data is accessed across the entire mesh.
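A minimal sketch of domain-owned access control behind a shared interface. The `DataProduct` class, domain names, and roles are hypothetical: each domain team writes its own policy, while every data product exposes the same `read` entry point, which is where mesh-wide standards such as audit logging are enforced.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class DataProduct:
    """A domain-owned data product with its own access policy."""
    owner_domain: str
    records: list
    policy: Callable[[dict], bool]  # written and maintained by the owning team
    audit_log: list = field(default_factory=list)

    def read(self, requester: dict) -> list:
        # Mesh-wide standard: every access attempt is logged, allowed or not.
        allowed = self.policy(requester)
        self.audit_log.append((requester["id"], allowed))
        if not allowed:
            raise PermissionError(f"{requester['id']} denied by {self.owner_domain}")
        return list(self.records)


# Each domain defines its own rule; a mistake in one policy only affects that product.
hr = DataProduct("hr", ["salary rows"], lambda r: r["domain"] == "hr")
sales = DataProduct("sales", ["pipeline rows"], lambda r: r["role"] in {"analyst", "manager"})

analyst = {"id": "alice", "domain": "sales", "role": "analyst"}
print(sales.read(analyst))  # ['pipeline rows']
try:
    hr.read(analyst)
except PermissionError as err:
    print(err)  # alice denied by hr
```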

2. Quantum-Resilient Encryption

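Though still an emerging threat, a sufficiently large, fault-tolerant quantum computer running Shor’s algorithm would break the public-key cryptography in wide use today, such as RSA and elliptic-curve schemes. Because attackers can record encrypted data now and decrypt it once such machines exist (“harvest now, decrypt later”), organizations should begin migrating to Post-Quantum Cryptography (PQC) standards immediately.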

- Crypto-Agility: Building IT systems that are “crypto-agile,” meaning the underlying cryptographic algorithms can be easily swapped out and updated without major system overhauls.

- Data Inventory: Prioritizing long-lived sensitive data (e.g., patient records, military secrets, proprietary formulas) that needs to be protected for decades and applying PQC standards to this data first.
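Crypto-agility is largely a design discipline: code requests an algorithm by identifier, and every stored artifact records which algorithm produced it, so a new algorithm can be introduced and old data re-protected without touching callers. The sketch below uses HMAC-SHA-256 and HMAC-SHA3-256 from Python’s standard library purely as stand-ins; they are not the public-key algorithms that Shor’s algorithm threatens, and a real migration would register vetted PQC implementations (such as the NIST-standardized ML-KEM and ML-DSA) behind the same interface. The registry layout and identifiers are hypothetical.

```python
import hashlib
import hmac

# Registry of integrity algorithms keyed by identifier (stand-ins, not PQC).
ALGORITHMS = {
    "hmac-sha256": lambda key, data: hmac.new(key, data, hashlib.sha256).digest(),
    "hmac-sha3-256": lambda key, data: hmac.new(key, data, hashlib.sha3_256).digest(),
}
CURRENT_ALG = "hmac-sha3-256"  # changing this one value switches all new writes


def protect(key: bytes, data: bytes) -> dict:
    """Tag data with the current algorithm and record which one was used."""
    return {"alg": CURRENT_ALG, "data": data, "tag": ALGORITHMS[CURRENT_ALG](key, data)}


def verify(key: bytes, record: dict) -> bool:
    """Verify with whichever algorithm the record says produced it."""
    expected = ALGORITHMS[record["alg"]](key, record["data"])
    return hmac.compare_digest(expected, record["tag"])


def needs_migration(record: dict) -> bool:
    """Records made with an older algorithm are candidates for re-protection."""
    return record["alg"] != CURRENT_ALG


key = b"demo-key-not-for-production"
legacy = {"alg": "hmac-sha256", "data": b"patient-record",
          "tag": ALGORITHMS["hmac-sha256"](key, b"patient-record")}
print(verify(key, legacy), needs_migration(legacy))  # True True
fresh = protect(key, b"patient-record")
print(verify(key, fresh), needs_migration(fresh))    # True False
```

Pairing `needs_migration` with the data inventory lets long-lived records be re-protected first.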

3. Proactive AI Red Teaming

Organizations must proactively test their defenses using AI-powered attack simulations.

- Adversarial Red Teams: Utilizing specialized security teams equipped with the same generative AI tools used by attackers to launch automated, realistic breach attempts against the organization’s network and models.

- Continuous Automated Testing: Deploying AI-powered penetration testing tools that continuously probe the environment for new weaknesses introduced by routine updates or system changes, ensuring 24/7 security validation.
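Continuous testing pays off mainly because each run is compared with the last known-good state. A minimal sketch of that comparison step, assuming scan results have already been reduced to (host, port, finding) tuples; the hosts and findings are hypothetical, and the scanner itself is out of scope.

```python
def new_findings(baseline, current):
    """Findings present in the current scan but absent from the baseline,
    i.e. weaknesses likely introduced by a recent change."""
    return sorted(set(current) - set(baseline))


def resolved_findings(baseline, current):
    """Findings that disappeared since the baseline scan."""
    return sorted(set(baseline) - set(current))


baseline_scan = [
    ("web-01", 443, "tls-1.0-enabled"),
    ("db-01", 5432, "weak-password-policy"),
]
current_scan = [
    ("web-01", 443, "tls-1.0-enabled"),
    ("web-01", 8080, "admin-panel-exposed"),  # appeared after an update
]

print(new_findings(baseline_scan, current_scan))
# [('web-01', 8080, 'admin-panel-exposed')]
print(resolved_findings(baseline_scan, current_scan))
# [('db-01', 5432, 'weak-password-policy')]
```

Alerting only on the difference keeps a 24/7 pipeline from re-reporting known, accepted findings on every run.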

The battle for data security in the 21st century is a battle of wits between competing AIs. Victory will not go to the biggest budget or the largest firewall, but to the organization that can deploy the most adaptive, intelligent, and proactive defense system, turning the threat of AI into the ultimate shield for their most valuable asset: their data.